The AI Act and the challenge of navigating a rapidly evolving technology

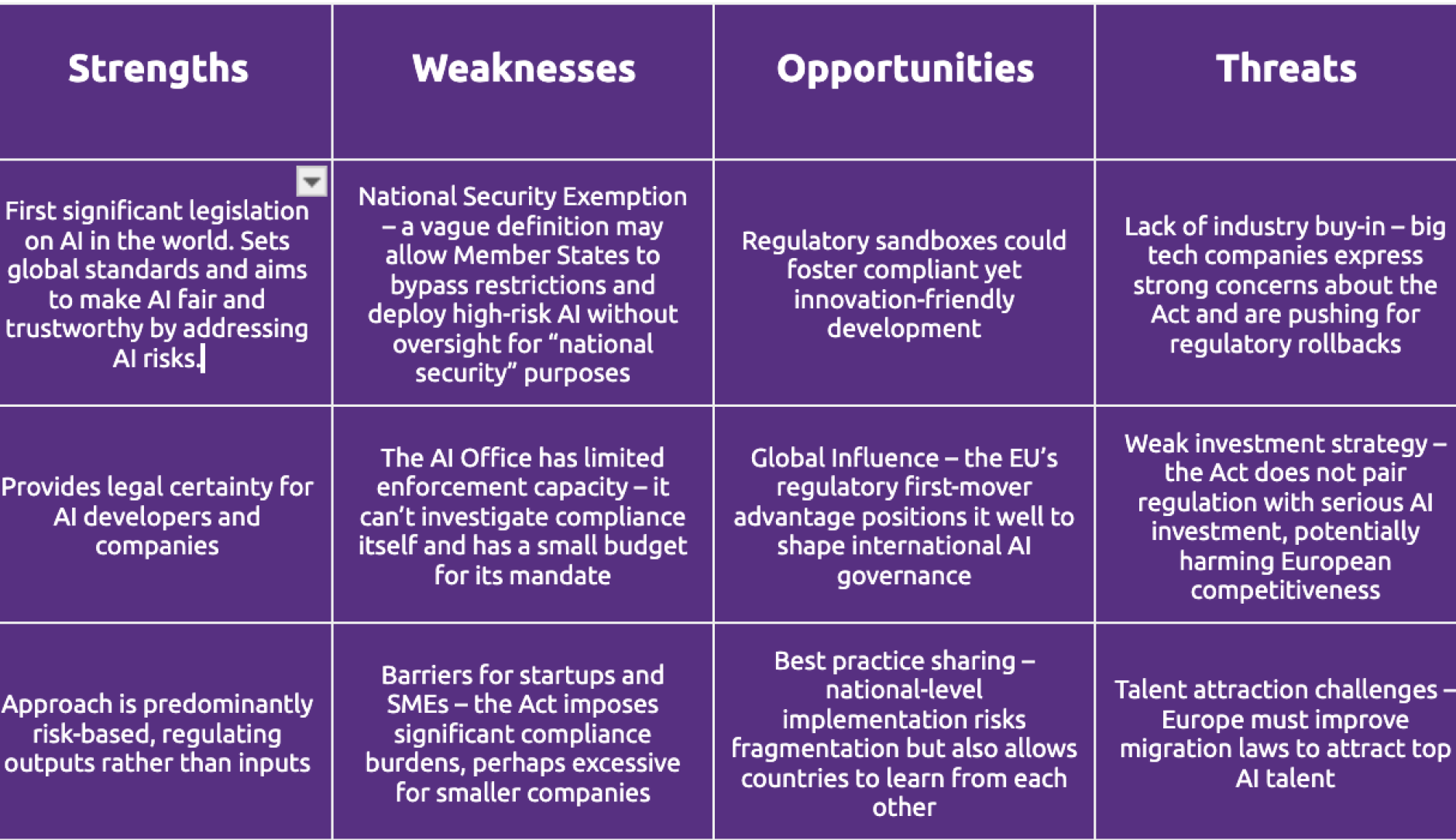

As the EU and the new US government appear set to argue over tech regulation, Volt takes a look at the strengths, weaknesses, opportunities and threats of a key piece of European digital legislation: the Artificial Intelligence Act.

No longer a distant legislative concept, the AI Act has arrived. In February, the first set of rules came into effect, setting AI literacy requirements for employees of providers and deployers, and prohibiting certain AI use cases. Further rules will roll out in phases, with most provisions applying from August 2026.

In this piece we have attempted to critically assess the AI Act, a challenging exercise mirroring some of the difficulties encountered by lawmakers when drafting the legislation. Specifically:

Legislation is always a balancing act. Many of the decisions that led to the final version of the AI Act were tradeoffs, including the evergreen discussion on the extent to which regulation stifles innovation.

The Act’s impact varies depending on who you ask. What a big tech company sees as an undue burden, an SME or a consumer might view as an essential safeguard.

The recent development of artificial intelligence has been extremely fast, with applications across a vast range of fields and significant investment, which makes regulating it particularly challenging.

When the Act was originally announced in 2019, the goal was to reinforce customer safety when interacting with AI systems. The dominant systems back then were expert systems and relatively simple neural networks. When the Act was almost complete, ChatGPT arrived, and the resulting shift in attention towards how AI interacts with society heavily influenced negotiations in 2022 and 2023.

The AI Act is still being finalised through secondary legislation. Discussions on codes of practice are laying the groundwork for implementation, and Member States are preparing the bodies that will be responsible for it. This makes definitive judgements premature.

A strong start

In spite of these challenges, the AI Act has three main strengths:

Timing and acknowledgement of AI risks. As the first globally recognised and significant legislation on AI, the Act’s timing has enabled it to influence other countries with the standards it sets. For instance, South Korea, Brazil, Japan, California, Canada (and arguably also the UK) have either adopted, attempted to adopt, or are in the process of adopting AI regulation reminiscent of the EU AI Act. It is also the first legislation to recognise the risks of AI and make an explicit effort to address them, with the ultimate aim of increasing AI adoption.

Provision of legal certainty. The legislation (theoretically) reduces legal uncertainty for AI developers, investors and companies. As the leading law on AI in the European Union, it should integrate well with other EU digital laws.

A predominantly risk-based approach. The law regulates outputs of AI models, rather than inputs, enabling it to remain relevant (with some adaptations) even as AI models rapidly develop. The exceptions where inputs are regulated, such as compute thresholds for general purpose models with systemic risk and the prohibition of inferring ‘sensitive attributes’ when processing biometric data or untargeted facial recognition datasets, still appear appropriate.

Other notable strengths include the requirements for additional compliance measures for General Purpose AI models with systemic risks, and the significant sanctions for infringing the Act (up to 7% of global annual turnover), which should provide a good deterrent even for those with deep pockets.

The devil in the detail

Despite its merits, the AI Act has several weaknesses. The vagueness of some crucial definitions is concerning, in particular the use of the term ‘national security’. Currently, the Act does not apply to AI systems used by Member States for ‘national security’ purposes. Asked to clarify, the Commission pointed to the definition provided by the EU’s Court of Justice, under which national security ‘encompasses the prevention and punishment of activities capable of seriously destabilising the fundamental constitutional, political, economic or social structures of a country and, in particular, of directly threatening society, the population or the State itself, such as terrorist activities.’

We share some of the concerns of human rights groups that the imprecise nature of the definition could allow individual Member States to abuse the exemption and deploy prohibited or high-risk models that do not comply with the AI Act’s provisions. That could be particularly problematic in sensitive contexts such as biometric mass surveillance or migration.

Another significant weakness lies in implementation capacity. The EU’s AI Office, responsible for overseeing the Act, is a small team with a budget half the size of the UK’s AI Safety Institute. Furthermore, governance and enforcement are meant to be handled by national market surveillance authorities, meaning that the AI Office oversees enforcement without the right to investigate compliance itself.

The Act also fails to sufficiently support AI innovation for startups and SMEs. The chief architect and lead author of the AI Act, Gabriele Mazzini, acknowledges that not enough was done to distinguish between smaller and larger players, thereby failing to reduce the former’s financial and compliance burden. Although the DigitalSME Alliance stresses that ‘quickly available, directly applicable rules for implementation’ will make the difference, the measures to support SMEs may take too long to implement.

Other weaknesses include the missed opportunity to require energy usage disclosure for AI systems, which is instead left to voluntary application. Uncertainty over how the AI Act interacts with other European digital laws, such as the DSA, GDPR and DMA, creates concerns that will need to be addressed. The capacity for adequate enforcement at Member State level is also unclear. Finally, the literacy requirements are meant for staff of AI providers, yet users may find it difficult to evaluate their privacy, ethical and fairness needs due to an incomplete understanding of the product.

Opportunities for Europe

The AI Act presents several opportunities if implemented wisely. Regulatory sandboxes, set to be operational by August 2026, are controlled environments that Member States must offer so that AI providers can test and validate their AI products under supervision. If implemented well, sandboxes could enable an innovation-friendly and unbureaucratic environment for responsible research. Spain is so far on track to deliver the first example. While there is much uncertainty about how implementation will differ between countries, it is a prime opportunity for regulators and practitioners to strike a good balance between innovation and regulation.

The Act also positions the EU as a global leader in AI governance. It could leverage its legal expertise and experience to be influential in international coordination efforts on AI, such as through the AI Action Summits or UN initiatives.

Likewise, Member States have the chance to share best practices among themselves. The implementation of the Act will be led by individual Member States, and although this presents the risk of heterogeneous implementations that could make it harder for businesses to operate in different EU countries, it also provides the opportunity for countries to learn from each other.

Spain, for example, has created the EU’s first AI regulatory body, while in the Netherlands, more than twenty national supervisory authorities collaborated to provide advice to the Dutch government on the implementation of the AI Act. These are examples of national initiatives that, if successful, could be replicated by other Member States.

Additionally, by grouping smaller initiatives and Member State collaborations, investments can be streamlined to place higher bets, something that could be developed into a CERN-like organisation for AI. Compliance exemptions for open-source models could lower the burden on collaborative development and facilitate AI open-source solutions. The recently announced InvestAI initiative appears promising in this regard, offering a way to centralise resources and funding for AI advancements across the EU.

Self-interest or genuine concerns?

Without industry buy-in, even the most well-intentioned regulation risks falling flat. Some influential stakeholders, including big tech companies, have expressed concerns that sometimes border on hostility towards the AI Act and other EU digital laws, warning that they will make Europe fall behind in AI innovation.

Relevant examples include Google, Apple and Meta delaying the launch of AI products in the EU, citing regulatory uncertainty. After Ireland’s Data Protection Commission stated that Meta’s plan to use the content of European Facebook and Instagram users’ public posts to train AI models was not compliant with GDPR, Mark Zuckerberg and Meta’s Chief AI Scientist, Yann LeCun, signed an open letter together with leaders of European companies. The letter stated that Europe should decide between relaxing its GDPR rules or ‘continue to reject progress, contradict the ambitions of the single market and watch as the rest of the world builds on technologies that Europeans will not have access to’.

Mario Draghi’s report on The Future of European Competitiveness, meanwhile, suggests that the AI Act’s additional regulatory requirements for general-purpose AI models with systemic risk, combined with other existing regulatory barriers, might lead to ‘only larger companies (which are often non-EU based) having the financial capacity and incentives to bear the costs of complying, (whereas) young innovative tech companies may choose not to operate in the EU at all.’

Some analysts consider such concerns to be largely well-meant. Others, though, believe that big tech companies clearly have a conflict of interest, benefiting as they do from less regulation, and that they are deliberately shaping a narrative that AI regulations are killing innovation. Regardless, the problem remains that the AI Act appears to lack adequate buy-in from the industry. This could threaten its enforcement, both from within Europe and from outside, with the Trump administration likely to be receptive to requests that it show less tolerance for Europe’s regulation of American tech companies, possibly including retaliation.

A further missed opportunity was to pair customer protection with serious investments. Even if the AI Act’s purpose was not to directly facilitate investments in European AI, it falls well short of answering the calls for more, and more targeted, investment needed to meet Europe’s competitiveness goals. Volt advocates for tripling funding to Horizon Europe and dedicating part of the additional funding towards AI.

Being at the forefront of AI innovation will also require attracting top talent to Europe. Current migration laws should be improved, for example through Volt MEP Damian Boeselager’s Blue Card proposals (Europe’s answer to the American green card), the Europeanisation of the residence status, and a new initiative for so-called ‘talent pools’, a matching system for employers and job seekers in non-EU countries.

In conclusion

Since the Act’s passing roughly one year ago, the AI field has developed rapidly and regulation has become an even more fundamental part of the debate. If anything, this underscores the importance of getting the Act right. Although time is scarce, threats can be turned into opportunities, and weaknesses into strengths. At Volt we will continue to have the important conversations around general-purpose AI, innovation, data, cooperation and all other relevant elements of AI and technology. If you’re interested in joining and helping to shape Europe’s future of AI, reach out!

Opinion article by Mario Giulio Bertorelli and Robert Praas. The authors would like to thank Matthias Buchorn, Klaus Pinhack, Eva van Rooijen, Eike Schultz, Wouter Van Der Wal, Basile Verhulst, and Yannick Wehr for their feedback and support.
